Scaling CRUD Applications

One would think that building a typical Create-Read-Update-Delete (CRUD) program is simple: a basic building block of any system, with only minor variations to handle the complexity and scale of its data.

As with all of our enterprise apps, including JomKasi.org, our brand-new SaaS offering for donations management, CRUD programs turn out to be not so simple after all.

JomKasi.org provides a multi-tenanted system for NGOs to publicize and collect small-ticket donations via an integrated payment gateway interface.

One such CRUD operation involves curating campaigns for aggregating donations made by visitors to the site. We expect the number of visitors to be high during weekends and public holidays.

Thus, we have to consider a few things.

- The mechanism of creating donation records that require system-generated unique keys.

- The campaign page will be a hotspot on the website — the way we store and retrieve this large amount of data directly influences the performance and scalability of the system.

- The campaign page can be updated by multiple personnel within the NGO at the same time, which can cause the Lost Update Problem.

Donation Creation

Donation creation requires a unique key since there are no natural keys in the source data. Relying on the database table’s UNIQUEID mechanism would create a bottleneck at the table, since these IDs are generated only when the record is inserted.

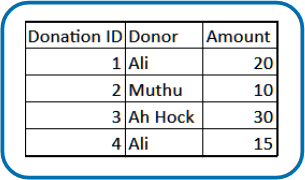

These unique IDs are needed for later operations: retrieval, updating or deletion. (Remember that there is no natural key in the original donation data.)

The normal UNIQUEID generated by the database is an ordered sequence that starts from an initial seed value, e.g. 1, and increments from there, as illustrated below in a table of 4 donation records.

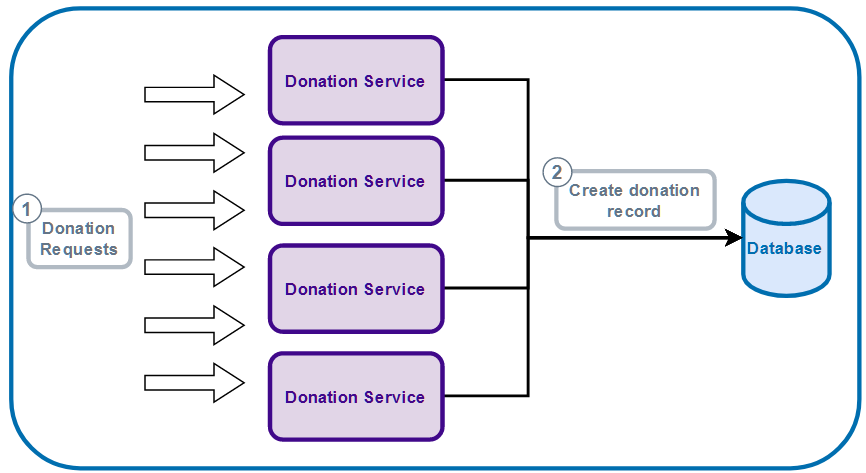

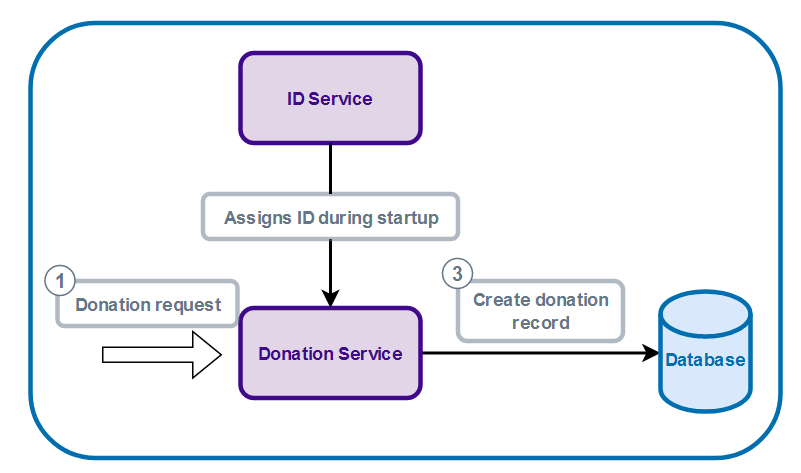

JomKasi.org uses a microservice architecture so that we can scale out as request traffic grows. Because we must support horizontal scaling during periods of high-volume donations, the database becomes a bottleneck: each replicated service has to wait for the database to generate the next ID in sequence before it can create the record in the table. The following illustrates the overall idea.

Multiple donation requests being served by multiple instances of donation service, connecting to a single database

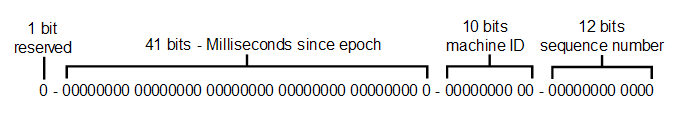

We needed a solution that provides uniqueness but with a turnaround in microseconds instead of tens or hundreds of milliseconds. That solution is the Snowflake ID, originally created by Twitter in 2010. With this method, the service that receives the donation creation request creates a 64-bit number as the ID for the record. The ID consists of 1 reserved bit (unused), 41 bits for the timestamp, 10 bits for the machine ID and 12 bits for the sequence number.

Of course, we could use a UUID as an alternative, but a UUID does not strictly guarantee uniqueness and, more importantly, it is not sequential. Because there is a chance, however small, of two UUIDs colliding, we would still need custom code to regenerate the ID on conflict, and we could only detect a conflict when a database insert returned an ID conflict error. That would subject the service to the same database bottleneck as before.

With the Snowflake ID method, we can guarantee uniqueness and decouple ID generation from the database. We also get an ID that we can expose externally while revealing less about our data (standard sequential database IDs would expose our current record count and the IDs of existing records).

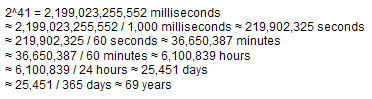

The 41 bits store the number of milliseconds elapsed since an epoch, usually 1st January 1970. Because 41 bits can only cover roughly 69 years of milliseconds, the epoch is usually moved to a much more recent time, such as the day the Snowflake ID was added to the system.
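The 69-year figure can be checked with a few lines of arithmetic. This is only a sketch: the custom epoch of 1st January 2020 is a hypothetical value chosen for illustration, not the one JomKasi.org uses.

```python
from datetime import datetime, timedelta, timezone

TIMESTAMP_BITS = 41
MS_PER_YEAR = 1000 * 60 * 60 * 24 * 365.25  # average year, including leap days

# Number of distinct millisecond values that fit in 41 bits.
max_ms = 2 ** TIMESTAMP_BITS

# How many years of milliseconds that covers (a little under 70).
years = max_ms / MS_PER_YEAR

# Hypothetical custom epoch, used here only to show the effect of moving it.
custom_epoch = datetime(2020, 1, 1, tzinfo=timezone.utc)
unix_epoch = datetime(1970, 1, 1, tzinfo=timezone.utc)

# Last instant that can be represented for each choice of epoch.
last_with_unix_epoch = unix_epoch + timedelta(milliseconds=max_ms - 1)
last_with_custom_epoch = custom_epoch + timedelta(milliseconds=max_ms - 1)

print(f"{years:.1f} years of range")
print("Unix epoch runs out in", last_with_unix_epoch.year)
print("Custom epoch runs out in", last_with_custom_epoch.year)
```

Moving the epoch forward by 50 years pushes the exhaustion date forward by the same amount, which is the whole reason for choosing a recent epoch.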

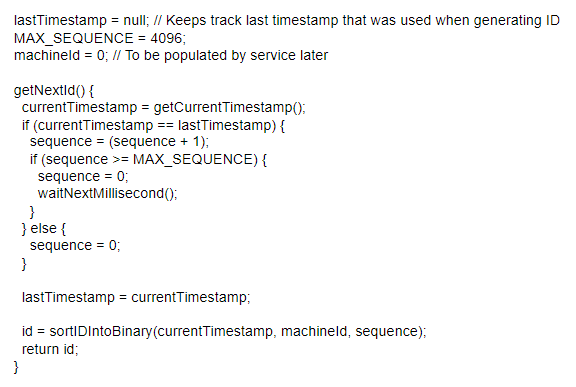

The 10 bits store the ID of the machine where the donation request was processed. Using 10 bits gives us 1024 (2^10) unique machine IDs. Since we are running microservices, we use them as service IDs instead of physical machine IDs, so each running service instance has its own ID. Finally, the 12 bits store a running sequence number with 4096 (2^12) possible values, 0 to 4095. At the start of each millisecond, a service resets its sequence number to 0. When a request is received, we check whether the sequence for the current millisecond has already reached 4095. If not, we use the next sequence number and let the service process the request.

This allows up to 1024 services to receive donation requests at the same time, each creating up to 4096 IDs per millisecond without conflict. If a service exhausts its sequence within a millisecond, the solution is simple: wait for the next millisecond, at which point the sequence number resets to 0 and another 4096 sequence numbers become available.

If you’ve read this far, congratulations! You may be asking, “How does one set up the machine ID?” At a machine level this is easy, because each machine usually has a different configuration; the same goes for different types of services that process the donation. But what about multiple instances of the same service, which share essentially the same program configuration?

What we did was create a separate service to assign machine IDs, which we will call the ID Service for now. This service keeps track of all the machine IDs that have been handed out to requesting donation services. A donation service connects to the ID Service at startup, requests a machine ID, and then continues as normal. When the donation service has to generate a unique donation ID, it simply uses the machine ID it received during startup.
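The bit layout and the wait-for-next-millisecond rule can be sketched as follows. This is a minimal illustration under assumptions, not the JomKasi.org implementation: the class name, the custom epoch (1st January 2020) and the decision to raise an error if the clock moves backwards are all hypothetical choices.

```python
import threading
import time

EPOCH_MS = 1_577_836_800_000  # 2020-01-01T00:00:00Z, a hypothetical custom epoch
MACHINE_ID_BITS = 10
SEQUENCE_BITS = 12
MAX_MACHINE_ID = (1 << MACHINE_ID_BITS) - 1  # 1023
MAX_SEQUENCE = (1 << SEQUENCE_BITS) - 1      # 4095


class SnowflakeGenerator:
    """Generates 64-bit IDs: 41-bit timestamp, 10-bit machine ID, 12-bit sequence."""

    def __init__(self, machine_id: int) -> None:
        if not 0 <= machine_id <= MAX_MACHINE_ID:
            raise ValueError("machine_id must fit in 10 bits")
        self._machine_id = machine_id
        self._last_ms = -1
        self._sequence = 0
        self._lock = threading.Lock()

    @staticmethod
    def _now_ms() -> int:
        return time.time_ns() // 1_000_000

    def next_id(self) -> int:
        with self._lock:
            now = self._now_ms()
            if now < self._last_ms:
                # Assumption: fail loudly rather than risk duplicate IDs.
                raise RuntimeError("clock moved backwards")
            if now == self._last_ms:
                # Same millisecond: take the next sequence number.
                self._sequence = (self._sequence + 1) & MAX_SEQUENCE
                if self._sequence == 0:
                    # All 4096 values used; spin until the next millisecond.
                    while now <= self._last_ms:
                        now = self._now_ms()
            else:
                # New millisecond: the sequence starts again at 0.
                self._sequence = 0
            self._last_ms = now
            timestamp = now - EPOCH_MS
            return (
                (timestamp << (MACHINE_ID_BITS + SEQUENCE_BITS))
                | (self._machine_id << SEQUENCE_BITS)
                | self._sequence
            )


def decode(snowflake: int) -> tuple[int, int, int]:
    """Splits an ID back into (unix timestamp in ms, machine ID, sequence)."""
    sequence = snowflake & MAX_SEQUENCE
    machine_id = (snowflake >> SEQUENCE_BITS) & MAX_MACHINE_ID
    timestamp_ms = (snowflake >> (MACHINE_ID_BITS + SEQUENCE_BITS)) + EPOCH_MS
    return timestamp_ms, machine_id, sequence


generator = SnowflakeGenerator(machine_id=7)
donation_id = generator.next_id()
print(donation_id, decode(donation_id))
```

Because the timestamp occupies the most significant bits, IDs from one generator sort in creation order, which is the sequential property a random UUID lacks.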
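The bookkeeping such an ID Service needs is small. The sketch below is an in-process stand-in under assumptions: the class and method names are hypothetical, a real service would be reached over the network, and the `release` method (returning an ID when an instance shuts down) is an addition not described in this post.

```python
import threading

MAX_MACHINE_IDS = 1024  # 10 bits in the Snowflake ID


class IdService:
    """Hands out machine IDs 0..1023 and remembers which instance holds each."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._assigned: dict[int, str] = {}  # machine ID -> instance name

    def acquire(self, instance_name: str) -> int:
        """Called once by a donation service instance at startup."""
        with self._lock:
            for candidate in range(MAX_MACHINE_IDS):
                if candidate not in self._assigned:
                    self._assigned[candidate] = instance_name
                    return candidate
        raise RuntimeError("all 1024 machine IDs are in use")

    def release(self, machine_id: int) -> None:
        """Assumed extension: frees an ID so a new instance can reuse it."""
        with self._lock:
            self._assigned.pop(machine_id, None)


id_service = IdService()
first = id_service.acquire("donation-service-1")
second = id_service.acquire("donation-service-2")
print(first, second)  # two different machine IDs
```

Each donation service instance would call `acquire` once at startup and keep the returned value for every ID it generates afterwards.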

There’s some coupling between our donation service and this ID Service, but it happens only during startup, so it has negligible performance impact.

Having covered record creation, we next describe the considerations when reading data at scale.

Reading Campaign Data

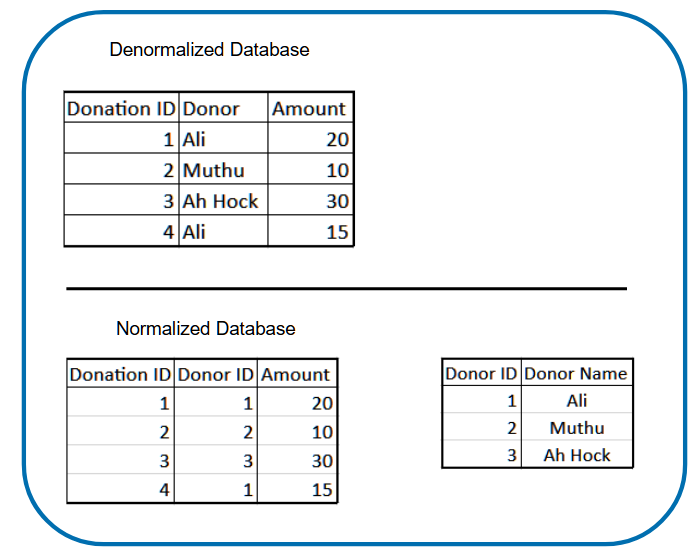

In typical database modelling, we usually normalize the tables to make updates and deletions of repeated values easier.

As an example, take the denormalized database in the illustration: if we wanted to change the donor name from “Ali” to “Abu”, we would need to find every record in the Donation table where the donor name “Ali” occurs and change each one. Obviously, this is not good for performance.

With the normalized version, however, you only need to update it once, in the much smaller Donor table. At this scale, either approach would be easy. At a bigger scale, with up to hundreds of millions of records, we would need to reconsider the approach.

In normalized databases, the cost is in retrieval. Fetching data from multiple tables requires table joins, which are costly in terms of CPU. This is even more wasteful when the data rarely changes but is heavily read, meaning you are repeatedly doing joins for data that is mostly static.

In our case, that data is our campaign data. Usually, campaigns are created once and perhaps updated a few times before they are published. After publishing, they are rarely updated but can be read by any site visitor, often by many people at the same time. Our solution is to store campaign data in Redis, an in-memory database that acts as our cache. In-memory means the data is held in RAM instead of secondary storage (HDDs/SSDs), which speeds up retrieval. To make it even more efficient, the data stored in Redis is almost ready to be displayed in the UI and needs minimal database lookups to complete the campaign data.

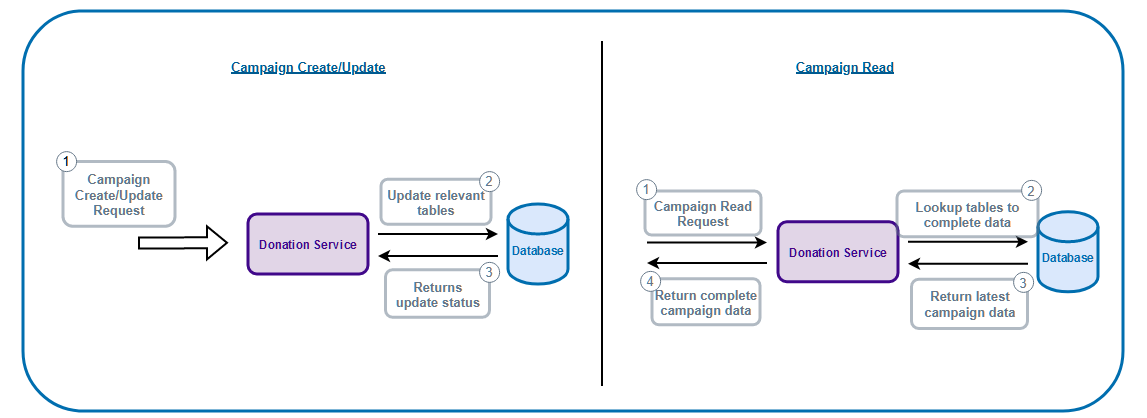

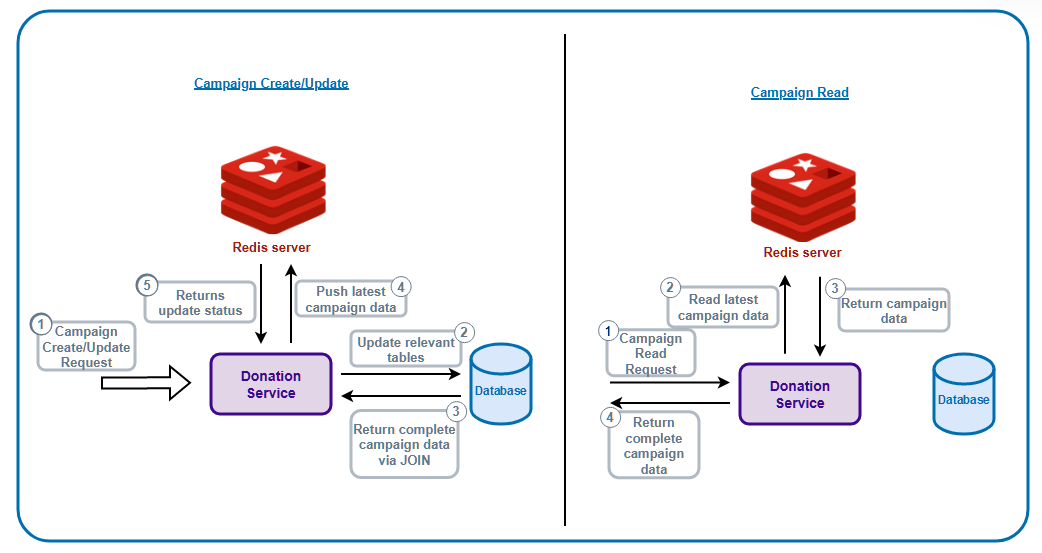

Without Redis, every single read requires table joins to fetch campaign data.

With Redis, table joins are done during update and the result is then stored in Redis. During read, a single query to Redis can get us the complete campaign data.

The question then is: how do we know that this data is the latest from the database? What we did was replace the existing data in Redis whenever the campaign data is updated. There are many ways to approach this, but we went with a streaming platform to update Redis. We will discuss this streaming platform in a later blog post; we chose it because it lets us update various other components in our system at the same time.
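The pattern of joining at update time and reading a ready-made document can be sketched as below. Several things here are assumptions made so the example runs on its own: a plain dictionary stands in for Redis, SQLite stands in for the main database, the table and column names are invented, and the cache is refreshed by a direct function call rather than through a streaming platform.

```python
import json
import sqlite3

# Stand-in for Redis: key -> JSON string. A real system would use a Redis client.
cache: dict[str, str] = {}

db = sqlite3.connect(":memory:")
db.executescript("""
    CREATE TABLE ngo (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE campaign (id INTEGER PRIMARY KEY, ngo_id INTEGER, title TEXT);
    INSERT INTO ngo VALUES (1, 'Example NGO');
    INSERT INTO campaign VALUES (10, 1, 'Flood relief');
""")


def refresh_cache(campaign_id: int) -> None:
    """Runs the join once and stores the display-ready result in the cache."""
    row = db.execute(
        "SELECT c.id, c.title, n.name "
        "FROM campaign c JOIN ngo n ON n.id = c.ngo_id "
        "WHERE c.id = ?",
        (campaign_id,),
    ).fetchone()
    document = {"id": row[0], "title": row[1], "ngo": row[2]}
    cache[f"campaign:{campaign_id}"] = json.dumps(document)


def update_campaign_title(campaign_id: int, title: str) -> None:
    """Writes to the database, then replaces the cached copy."""
    db.execute("UPDATE campaign SET title = ? WHERE id = ?", (title, campaign_id))
    db.commit()
    refresh_cache(campaign_id)


def read_campaign(campaign_id: int) -> dict:
    """Read path: a single cache lookup, no joins."""
    return json.loads(cache[f"campaign:{campaign_id}"])


refresh_cache(10)
update_campaign_title(10, "Flood relief 2024")
print(read_campaign(10))
```

The join cost is paid once per update instead of once per page view, which suits data that is written rarely and read constantly.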

Updating Campaign Data

What about updating campaign data? We need to be aware of the problems associated with multiple users updating the same data at the same time. Since we have a maintenance portal, which allows multiple users from the same NGO to update their campaign info, we wouldn’t want to have “The Lost Update Problem.”

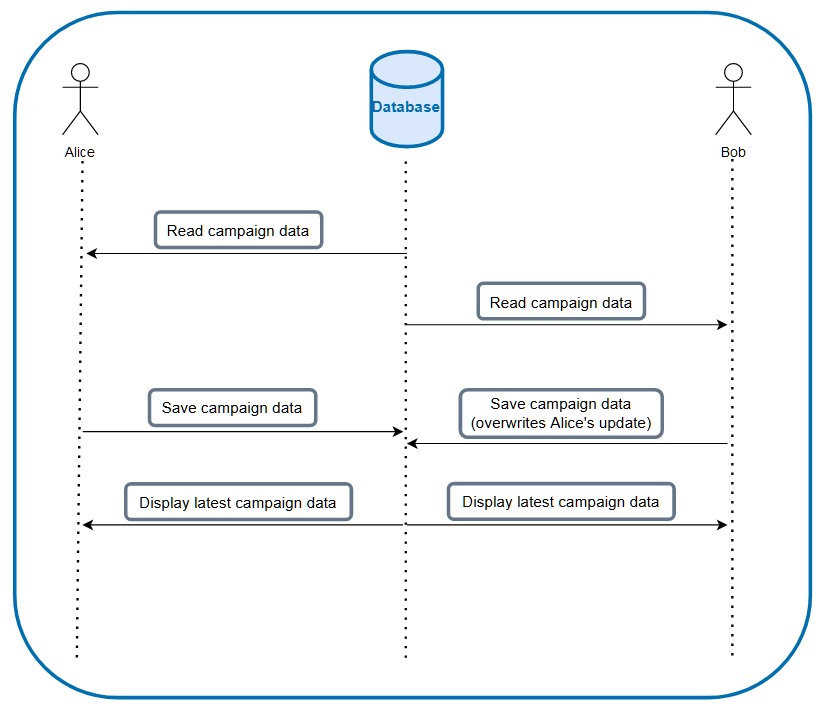

Imagine two users, Alice and Bob, both updating a particular campaign via the maintenance portal from different locations at about the same time. Alice and Bob open the same campaign page and start editing: while Alice is editing, Bob is editing too. When they finish, they both save their work. Let’s assume Alice and Bob each changed the name of the campaign to something different. Because this is a multi-process, multi-access system, there is no guarantee that the updates will be applied in the order in which the two users clicked the update button.

After saving, the service returns the saved campaign data to the respective UI. Because so little time passes between Alice’s and Bob’s saves, Alice may see the campaign data with Bob’s update instead of hers. From Bob’s side everything looks normal, but from Alice’s point of view her update appears to have been lost, hence the lost update problem.

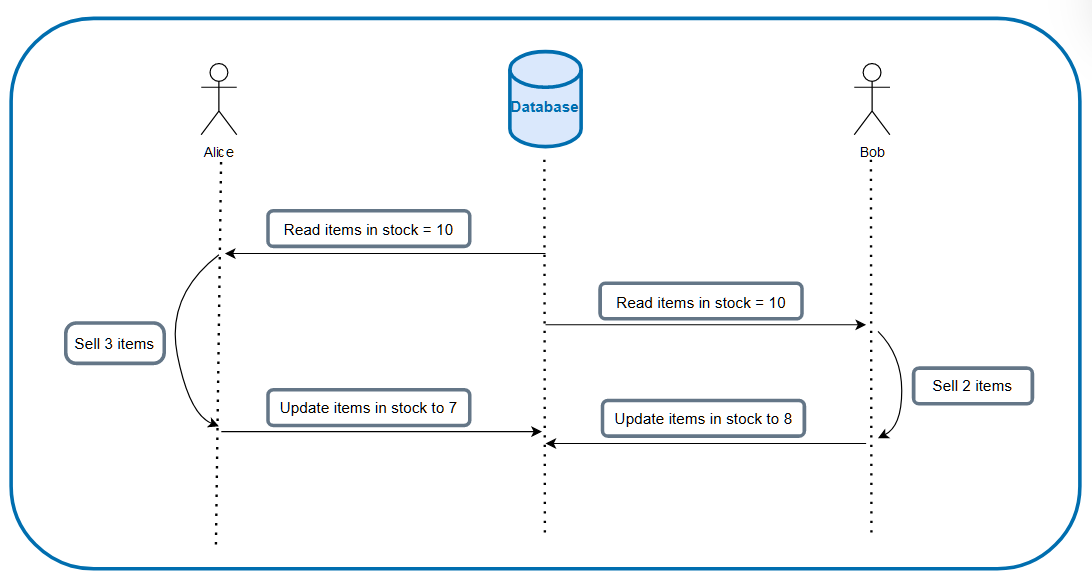

This is the variation where an update simply replaces whatever was in the database previously, so at the very least the data is still valid. If the update involves a calculation based on data read from the database, the result would be catastrophic, as each update depends on having read the latest record.

The lost update problem on a normal campaign data update, causing Alice’s update to be overwritten by Bob’s.

Another example of the lost update problem. Because the operation depends on the data that was read in order to update it, the lost update leaves the latest data unusable, unlike the previous example.

Can you imagine what the effect would be when more complex calculations are involved?
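To make the calculation case concrete, here is a small deterministic replay of the interleaving. The running total and the amounts are hypothetical values, and a dictionary stands in for the database record.

```python
# A single record holding a running total (hypothetical example data).
record = {"total": 100}

# Step 1: both users read the record before either has written.
alice_read = record["total"]  # 100
bob_read = record["total"]    # 100

# Step 2: each applies a calculation to the value they read, then writes it back.
record["total"] = alice_read + 50  # Alice writes 150
record["total"] = bob_read + 30    # Bob writes 130, based on the stale 100

# Alice's +50 has vanished: the stored total is wrong, not just out of date.
expected_total = 100 + 50 + 30
lost_amount = expected_total - record["total"]
print(record["total"], expected_total, lost_amount)
```

Unlike the renamed campaign, where one valid name replaces another, the stored total here matches neither the correct result nor anything a user intended.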

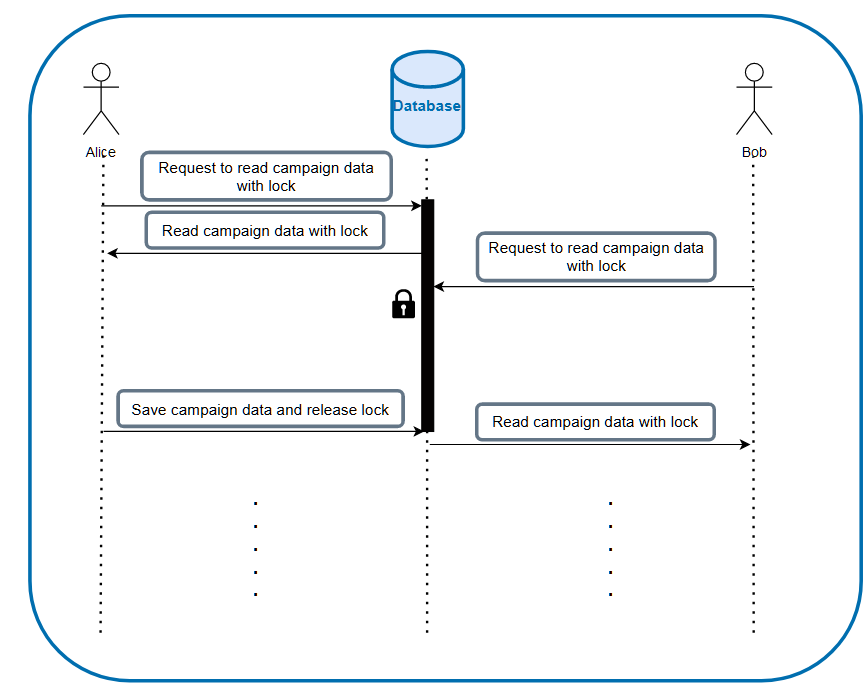

To resolve this, we looked into two methods. The first is the pessimistic lock, where we are pessimistic and assume the data will always be overwritten, so we lock the record in the DB whenever we perform a write operation on it. While the record is locked, no other service can read, update or delete the data. Any service that tries has to wait for the lock to be released, and we release it only after we have finished updating the record.

Releasing the lock does not fully eliminate the lost update problem, however. In the unlikely scenario that the later update catches up with the earlier one (because of extra processing after saving, or a difference in latency), Alice would still see her data overwritten by someone else and be confused. Pessimistic locking does address the calculation case, though, since each calculation is only ever applied to the latest data.
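The effect of a pessimistic lock on the calculation case can be sketched with threads. This is an analogy under assumptions: a `threading.Lock` stands in for the database row lock (in many SQL databases the equivalent would be a `SELECT ... FOR UPDATE` inside a transaction), and the record and amounts are hypothetical.

```python
import threading

record = {"total": 0}
record_lock = threading.Lock()  # stand-in for a row-level lock in the database


def add_donation(amount: int) -> None:
    # Acquire the lock before reading, so nobody else can read a value
    # that is about to be replaced.
    with record_lock:
        current = record["total"]           # read the latest value
        record["total"] = current + amount  # write back the calculated value
    # The lock is released here, only after the write has finished.


threads = [threading.Thread(target=add_donation, args=(10,)) for _ in range(100)]
for thread in threads:
    thread.start()
for thread in threads:
    thread.join()

print(record["total"])
```

Every read-calculate-write cycle runs to completion before the next one starts, so no increment is lost. The cost is that all writers queue behind one another.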

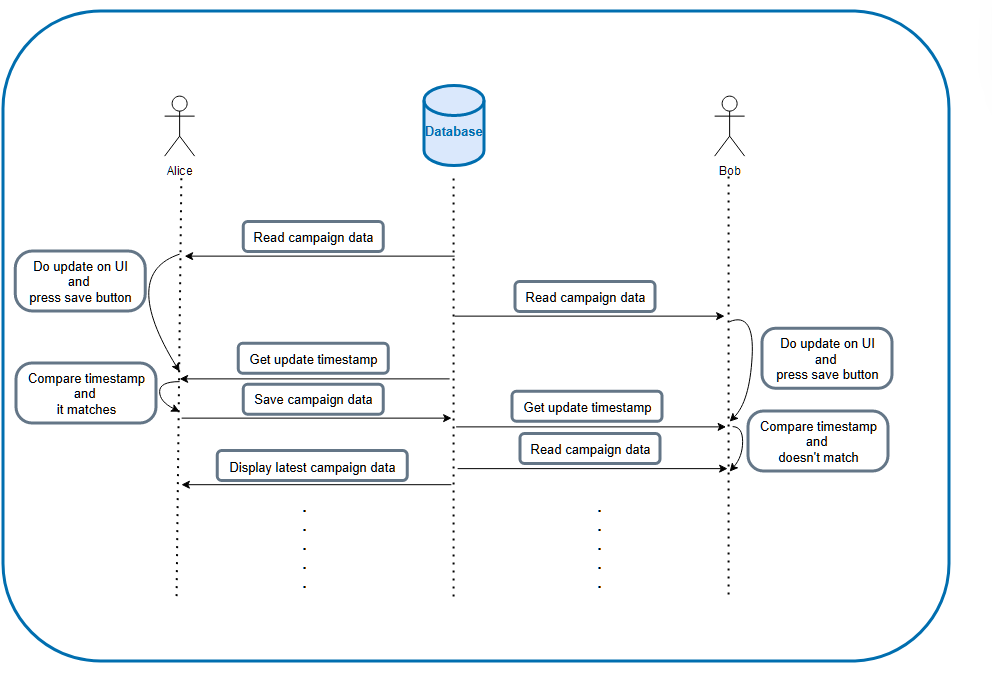

The second method is the optimistic lock. In this method, we are optimistic that people won’t overwrite our update. We detect an overwrite by comparing the update timestamp we obtained before the update with the current timestamp in the database. If they differ, we return the latest data to the UI so that the user can reapply their changes. If they are the same, we update the record as normal.

In Alice and Bob’s scenario, after Bob presses the save button, instead of seeing the page refresh with his update, he would see a popup saying that his campaign data is outdated and that he needs to refresh his page. On Alice’s side, she would see her update, because Bob cannot save his until he has retrieved the latest data.

A pessimistic lock locks the data at the database level, preventing other processes from accessing it until the lock is released at the end of the operation.

An optimistic lock does not lock the record in the database; instead, it checks a value in the record that helps determine whether we have the latest version.
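The timestamp check can be folded into the update statement itself. The sketch below uses SQLite so that it runs on its own, and makes several assumptions: the table layout and the `StaleDataError` name are invented, and `updated_at` is a simple integer counter standing in for a real update timestamp so the example is deterministic.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE campaign (id INTEGER PRIMARY KEY, name TEXT, updated_at INTEGER)"
)
db.execute("INSERT INTO campaign VALUES (1, 'Original name', 1)")
db.commit()


class StaleDataError(Exception):
    """Raised when the record changed after the caller last read it."""


def load(campaign_id: int) -> tuple[str, int]:
    """Returns (name, updated_at) as currently stored."""
    return db.execute(
        "SELECT name, updated_at FROM campaign WHERE id = ?", (campaign_id,)
    ).fetchone()


def save(campaign_id: int, new_name: str, seen_updated_at: int) -> None:
    """Updates only if updated_at still matches what the caller read."""
    cursor = db.execute(
        "UPDATE campaign SET name = ?, updated_at = updated_at + 1 "
        "WHERE id = ? AND updated_at = ?",
        (new_name, campaign_id, seen_updated_at),
    )
    db.commit()
    if cursor.rowcount == 0:
        # No row matched: someone else saved first.
        raise StaleDataError("campaign data is outdated, please refresh")


# Alice and Bob both open the page and see the same updated_at value.
_, alice_seen = load(1)
_, bob_seen = load(1)

save(1, "Alice's name", alice_seen)  # succeeds and bumps updated_at

try:
    save(1, "Bob's name", bob_seen)  # bob_seen is now stale
    bob_rejected = False
except StaleDataError:
    bob_rejected = True

print(load(1), bob_rejected)
```

Putting the comparison in the `WHERE` clause makes the check and the write a single statement, so there is no gap between them in which another save could slip through.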

Deletions also suffer from the Lost Update Problem, because both updates and deletes ultimately apply a change to a single record. Whenever a change can be applied to a record without first checking that it references the latest version of that record, this problem will occur.

Conclusion

So, for high-volume record creation in the DB, we moved ID generation out of the database and into the service level. This allows many records to be created at once without ID generation becoming a bottleneck at the database. For reading infrequently changing data, we store the data in Redis during updates and read from Redis instead of the database, which speeds up our read operations. To overcome the lost update problem, we looked into pessimistic and optimistic locking and decided to go with optimistic locking. With this, users won’t run into the “Lost Update Problem” when updating campaign data.

To wrap up, even though building a simple CRUD service seems easy, complexity increases significantly at a larger scale. The more complex your services are, the more scenarios you need to think about.
